Connect idle PCs with NAT Traversal and automatically allocate resources with autoscaling to reduce costs.

Provides automated operational infrastructure for the stable operation, performance optimization, and cost efficiency of AI models.

After completing pilot testing, the service will officially launch next year, and Series A investment is expected in the first half of 2026.

The core of AI service implementation is training and inference. Training is the process of teaching an AI model to recognize patterns and identify correlations in large-scale data. Inference is the process by which the trained model generates answers to user questions, which is the actual operation of the service.

Developing AI services requires massive data sets and high-performance computing. Data centers must be equipped with thousands or even tens of thousands of GPUs and a high-bandwidth network to optimize inter-GPU communication. GPUs cost over 15 million won per unit, sometimes exceeding 20 million won. These enormous infrastructure costs, particularly the high cost of purchasing and operating GPUs, are a key factor preventing most companies providing AI services from achieving profitability.

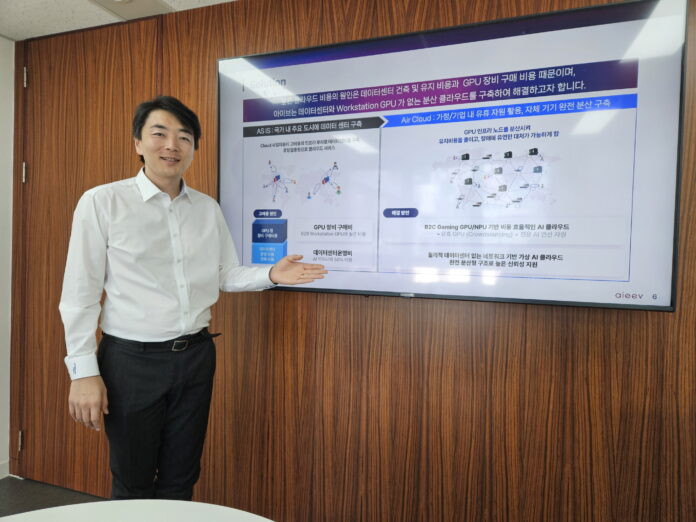

One company is working to lower infrastructure costs for companies providing AI services. While model development requires high-end GPUs, inference only repeats predefined computations on a completed model, so even low-end GPUs are sufficient. Recognizing this, aieev dramatically reduced GPU costs by building a distributed virtual cloud infrastructure that connects idle PCs in homes and PC cafes to provide the GPU resources needed for inference.

Park Se-jin, CEO of aieev, explained, "Developing large language models requires thousands or tens of thousands of GPUs, but inference services require just a few. Lowering the cost of building AI infrastructure will enable more people to access more AI services."

CEO Park founded aieev in early 2024 after seeing AI service companies struggle with a GPU shortage caused by the heavy use of GPUs for cryptocurrency mining.

CEO Park earned his Ph.D. in systems software from POSTECH and currently serves as an adjunct professor in the Department of Computer Science and Engineering at Keimyung University. The aieev team consists of system software specialists from POSTECH, KAIST, and Korea University, most of whom have over 15 years of industry experience.

Since its founding, aieev has filed for ten patents, secured two commercial customers, and is currently conducting proof-of-concept (PoC) trials with ten companies. Last August, it was selected for SK Telecom's "AI Startup Accelerator 3rd Batch." To commercialize its technology, the company plans to pursue a Series A investment in the first half of 2026.

We met with CEO Park Se-jin at aieev's Gangnam office to learn about how idle GPUs are connected, how distributed GPUs operate stably, and aieev's vision for reducing AI infrastructure costs.

Connecting personal computers around the world

So how are idle GPUs connected?

Typically, personal computers or computers in PC cafes don't have public IP addresses, making them inaccessible to outsiders. How can you connect to a PC that's inaccessible?

aieev connects to personal computers using NAT traversal. When a participating PC sends a connection request to the aieev server, its router automatically opens a path for the return traffic, and aieev uses that path to control the PC's GPU. If this method fails, the connection is made through an intermediate relay server. aieev has completed technical testing with more than 100,000 connected nodes.
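The connection order described above (the direct path first, the relay server as a fallback) can be sketched as follows. This is only an illustration of the control flow, not aieev's implementation: `Connection`, `connect_to_node`, and the three dialer functions are hypothetical names, and the dialers are stand-ins that do no real networking.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# A dialer is any callable that tries to reach a node and returns a
# connection handle on success or None on failure. Real dialers would
# open sockets; these are stand-ins so the fallback order is visible.
Dialer = Callable[[str], Optional[str]]


@dataclass
class Connection:
    node_id: str
    path: str    # "direct" or "relay"
    handle: str  # whatever the dialer returned


def connect_to_node(node_id: str, direct: Dialer, relay: Dialer) -> Connection:
    """Try the NAT-traversed direct path first, then fall back to the relay."""
    handle = direct(node_id)
    if handle is not None:
        return Connection(node_id, "direct", handle)
    handle = relay(node_id)
    if handle is not None:
        return Connection(node_id, "relay", handle)
    raise ConnectionError(f"node {node_id} unreachable by direct path or relay")


# Hypothetical dialers, for illustration only.
def direct_ok(node_id: str) -> Optional[str]:
    return f"direct://{node_id}"


def direct_blocked(node_id: str) -> Optional[str]:
    return None  # e.g. a router that does not allow the traversal attempt


def relay_ok(node_id: str) -> Optional[str]:
    return f"relay://{node_id}"
```

Calling `connect_to_node("pc-2", direct_blocked, relay_ok)` yields a connection whose `path` is `"relay"`, which is the fallback case the article describes.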

Eliminating the need for a data center directly translates into cost competitiveness. Because so much equipment is packed into such a small space, a traditional data center generates enormous amounts of heat, and 50% of its electricity costs go to cooling.

"Just the staff needed to build, operate, and maintain a data center is considerable. aieev eliminates the need for dedicated staff for security, operations, and maintenance."

Connectivity isn't the end of the story. Managing a large-scale network requires efficient communication between nodes and the ability to isolate faults to the node where they occur.

"Managing numerous nodes requires more than just a simple network connection. Efficient monitoring of the status of each node is essential, and if a node fails, the workload must be quickly transferred to another node."

Cost savings through stable operation and automatic allocation technology

In a distributed system, what matters most is service stability.

Operating AI services requires numerous open source libraries, and if any of them are missing or have incompatible versions, the service will not function properly. To address this issue, aieev developed container technology. Container technology bundles all the open source libraries, system settings, and dependencies required to run AI models into a single package, enabling stable model execution in a consistent environment, anywhere.

Large cloud services and data centers centralize all services in one location, so a network issue or power outage in the data center can disrupt the entire service. To prevent interruptions even when a single server fails, redundancy must be configured and an automatic recovery system must be in place.

aieev fully automates this entire process. Simply upload a model to the aieev platform, and everything needed to run it as an AI service, including performance monitoring, redundancy configuration, and automatic recovery, is handled automatically. Even if a failure occurs on one node, the internal scheduler immediately switches the workload to another node and runs it there. A physical failure has occurred, but logically, from the user's perspective, the failure is undetectable.
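The failover behavior described above depends on noticing that a node has gone silent and then moving its work elsewhere. A minimal sketch of that idea follows, assuming heartbeat-based failure detection and least-loaded reassignment. Both are common techniques chosen here for illustration; the article does not say how aieev's scheduler works, and `NodeMonitor` and its methods are hypothetical names.

```python
import time
from typing import Dict, List, Optional


class NodeMonitor:
    """Tracks last-heartbeat times and reassigns work from silent nodes."""

    def __init__(self, timeout_s: float = 10.0) -> None:
        self.timeout_s = timeout_s
        self.last_seen: Dict[str, float] = {}
        self.assignments: Dict[str, str] = {}  # workload -> node

    def heartbeat(self, node: str, now: Optional[float] = None) -> None:
        """Record that a node reported in (uses a monotonic clock by default)."""
        self.last_seen[node] = time.monotonic() if now is None else now

    def healthy_nodes(self, now: float) -> List[str]:
        """Nodes whose last heartbeat is within the timeout, sorted by name."""
        return sorted(n for n, t in self.last_seen.items()
                      if now - t <= self.timeout_s)

    def assign(self, workload: str, node: str) -> None:
        self.assignments[workload] = node

    def reassign_failed(self, now: float) -> Dict[str, str]:
        """Move workloads off nodes whose heartbeat is older than the timeout.

        Returns a mapping of moved workload -> new node.
        """
        healthy = self.healthy_nodes(now)
        moved: Dict[str, str] = {}
        if not healthy:
            return moved  # nowhere to move work; the caller must handle this
        # Count how many workloads each healthy node already runs.
        load = {n: 0 for n in healthy}
        for node in self.assignments.values():
            if node in load:
                load[node] += 1
        for workload, node in sorted(self.assignments.items()):
            if node not in load:  # node is missing or timed out
                target = min(healthy, key=lambda n: load[n])
                self.assignments[workload] = target
                load[target] += 1
                moved[workload] = target
        return moved
```

The `now` parameters exist so the logic can be exercised with fixed timestamps; a real monitor would call `reassign_failed(time.monotonic())` on a timer.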

Another important feature is autoscaling. In typical cloud environments, servers must be provisioned in advance to meet anticipated traffic. The problem is that user volume cannot be predicted reliably, so operators conservatively reserve more resources than they expect to need. Paying extra is preferable to a service interruption, which is far more damaging.

Allocating resources according to actual usage can lead to significant cost savings, and aieev achieves this with autoscaling. As traffic increases, the required nodes and containers are automatically scaled up, and as traffic decreases, they are automatically scaled down, just as more staff are deployed during peak customer activity and fewer during slow periods. Users can adjust the sensitivity to control the scaling rate.
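One way to picture autoscaling with an adjustable sensitivity is a proportional rule: compute the replica count that would bring per-replica load back to a target, then close only part of the gap on each step. The sketch below assumes that rule. It is not taken from aieev, and `desired_replicas` and all of its parameters are hypothetical.

```python
import math


def desired_replicas(current: int, load_per_replica: float, target_load: float,
                     sensitivity: float = 1.0,
                     min_replicas: int = 1, max_replicas: int = 100) -> int:
    """Return the replica count to use for the next interval.

    ideal = current * load_per_replica / target_load is the count that would
    bring per-replica load back to the target. `sensitivity` in (0, 1] sets
    how much of the gap between current and ideal is closed in one step.
    """
    if current < 1 or target_load <= 0 or not 0 < sensitivity <= 1:
        raise ValueError("invalid autoscaling parameters")
    ideal = current * load_per_replica / target_load
    stepped = current + sensitivity * (ideal - current)
    # Round up so a fractional result never leaves the service short.
    return max(min_replicas, min(max_replicas, math.ceil(stepped)))
```

With 4 replicas each at 150% of the target load, full sensitivity (`1.0`) jumps straight to 6 replicas, while a sensitivity of `0.5` moves to 5 and leaves the rest for the next interval. A lower sensitivity reacts more slowly but avoids overreacting to short spikes.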

"You can adjust the automatic scaling rate based on your business needs. For one customer, this autoscaling technology enabled cost savings of approximately 80% compared to traditional cloud services."

aieev provides an automated operational infrastructure that ensures stable operation, performance optimization, and cost efficiency for AI models. This means even startups can immediately achieve infrastructure stability comparable to that of large enterprises.

From startups to large corporations

aieev is currently conducting a pilot test in collaboration with a company that manages PC cafes nationwide. Upon completion, aieev will be able to use idle GPUs distributed across approximately 4,000 PC cafes nationwide to provide computing resources to AI service companies. Discussions with prospective customers are also under way. In particular, demand from companies requiring small-scale AI resources is steadily increasing, and these companies are expected to become aieev's primary target customers.

- Low-cost inference clouds: Air Cloud and Air Container

aieev's 'Air Cloud' is a low-cost AI inference platform positioned as an alternative to AWS. It is offered in two options to suit customer needs.

Air Cloud Standard operates by crowdsourcing idle GPUs from personal PCs and PC cafes. Prioritizing cost-effectiveness, it allows startups and developers to test and deploy AI models at minimal cost. Air Cloud Plus is designed for businesses requiring enterprise-grade stability and performance. It is a distributed cluster composed of proven, custom-built nodes and guarantees 99.99% availability, ensuring the stable operation of even mission-critical AI services.

Customers are dynamically allocated the number of GPUs they need based on their traffic and are billed based on the time they use. This allows them to flexibly respond to unpredictable traffic fluctuations. Furthermore, aieev's dashboard allows them to monitor the performance and status of API endpoints in real time and analyze detailed logs. This allows customers to verify that their AI services are functioning properly at any time and quickly respond to issues when necessary.
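To make the effect of time-based billing concrete, the arithmetic below compares reserving peak capacity around the clock with paying only for the GPUs allocated each hour. The hourly demand profile and the unit price are invented for illustration, and the function names are hypothetical. The result says nothing about aieev's actual prices or about the savings figure quoted earlier.

```python
from typing import Sequence


def fixed_cost(hourly_gpus: Sequence[int], price_per_gpu_hour: float) -> float:
    """Cost when capacity for the peak hour is reserved for every hour."""
    return max(hourly_gpus) * len(hourly_gpus) * price_per_gpu_hour


def usage_cost(hourly_gpus: Sequence[int], price_per_gpu_hour: float) -> float:
    """Cost when each hour is billed for the GPUs actually allocated."""
    return sum(hourly_gpus) * price_per_gpu_hour


# Hypothetical 24-hour day: quiet overnight, a short evening peak.
demand = [1] * 8 + [2] * 8 + [8] * 4 + [2] * 4
price = 1.0  # arbitrary currency units per GPU-hour, the same in both cases

fixed = fixed_cost(demand, price)  # 8 GPUs * 24 h = 192.0
usage = usage_cost(demand, price)  # 8 + 16 + 32 + 8 = 64.0
saving = 1 - usage / fixed         # about 0.67, i.e. roughly two-thirds
```

The saving comes entirely from the shape of the demand curve: the sharper and shorter the peak, the larger the gap between the two billing models.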

- OpenAI-compatible AI API service 'Air API'

'Air API' is a generative AI interface that developers can immediately integrate into their services. ChatGPT-like AI features can be implemented simply by calling the API, without building complex AI models.

Air API is billed per call, allowing businesses to pay only for what they actually use. Air API is a completely serverless AI solution, allowing customers to focus solely on business logic without worrying about infrastructure management or server operations. It automatically scales as traffic increases and scales back as it decreases.

aieev aims to launch Air API officially in 2026. After launch, it plans to support a range of Korean LLMs.
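Since Air API is described as OpenAI-compatible, a call would presumably follow the shape of OpenAI's chat completions endpoint. The sketch below only builds such a request and does not send it. The base URL, API key, and model name are placeholders, because the article gives no endpoint details and the service has not launched, and `build_chat_request` is a hypothetical helper.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str,
                       user_message: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder values; none of these are real aieev endpoints or model names.
req = build_chat_request("https://air-api.example/v1", "YOUR_API_KEY",
                         "example-model", "Hello")
# Sending it would be urllib.request.urlopen(req), followed by json.load()
# on the response object.
```

If the service is compatible in this sense, existing OpenAI client code would mainly need a different base URL and key rather than a rewrite.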

- Private Air Cloud: Optimizing Idle GPUs for Enterprises

Private Air Cloud is a solution that manages GPU resources scattered across a large corporation as a single, integrated platform. Because each department or team acquires GPUs based on its own needs, efficient company-wide operation is difficult. Private Air Cloud addresses this problem. Just as Air Cloud aggregates GPUs distributed around the world, Private Air Cloud can manage distributed GPUs within a company in real time and dynamically allocate them to the departments that need them.

AI for everyone

The name aieev is an acronym for "AI Equality, Everyone's Value."

"I believe the information gap will continue to widen. The gap in information accessibility, though invisible, will deepen. Some people use ChatGPT for free, while others pay 300,000 won per month for ChatGPT Plus. There's a significant difference in the information accessibility between these two."

Park's concerns are becoming reality. As AI services expand across all industries, the gap between companies with access to AI and those without is widening.

"I believe this gap should disappear. Everyone should be able to enjoy the benefits of AI. aieev aims to create a world where everyone can use AI without burden. To achieve this, infrastructure costs must decrease."

Beyond simply creating low-cost services, aieev is attempting to use technology to bridge a gap in society. To achieve this, infrastructure innovation is essential. If aieev succeeds, the landscape of AI usage could be radically transformed within a few years.
